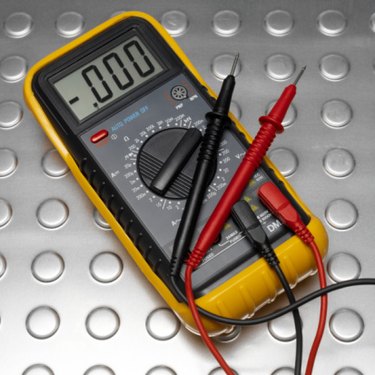

Electronics is the study of electrical circuits, which generally consist of power sources, wires and other electrical components. Several diagnostic tools are important when commissioning or testing electrical circuits, including the voltmeter, the ammeter and the multimeter, which combines both functions. These instruments are usually calibrated by the manufacturer before they are shipped, but you may need to calibrate some of the cheaper varieties yourself. The steps below check an ammeter against a known current.

Step 1

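Connect one terminal of the voltage source to one end of a 1 kOhm resistor. Use a precision resistor (1 percent tolerance or better), because the expected current in Step 4 can be no more accurate than the resistance value.

Step 2

Connect the ammeter in series with the resistor: wire the free end of the resistor to one terminal of the ammeter, and the other ammeter terminal to the remaining terminal of the voltage source. On a DC meter, the positive (usually red) terminal should face the positive side of the supply. All of the current flowing through the resistor now also flows through the ammeter, so the meter reads that current. Do not connect the ammeter across the resistor, in parallel: an ammeter has a very low internal resistance, so it would short-circuit the resistor and could blow the meter's fuse.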

Step 3

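Switch on the voltage supply and set it to 1 V. If possible, confirm the output with a voltmeter you trust, since any error in the supply voltage carries straight through to the expected current.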

Step 4

Calculate the expected current using Ohm's law, V = IR, where V is the voltage, I is the current and R is the resistance. Rearranged for current, I = V/R = 1 V / 1,000 Ohm = 0.001 A, or 1 milliamp (mA). Compare this with the value shown on the ammeter. If the two differ, adjust the calibration knob on the ammeter until it reads 1 mA.
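The comparison in Step 4 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a calibration procedure: the function names `expected_current` and `reading_error`, the 1.05 mA meter reading and the 1 percent acceptance limit are all hypothetical values chosen for the example.

```python
def expected_current(voltage_v: float, resistance_ohm: float) -> float:
    """Ohm's law rearranged for current: I = V / R, returned in amps."""
    if resistance_ohm <= 0:
        raise ValueError("resistance must be positive")
    return voltage_v / resistance_ohm


def reading_error(measured_a: float, expected_a: float) -> tuple[float, float]:
    """Return (absolute error in amps, relative error in percent)."""
    error_a = measured_a - expected_a
    return error_a, 100.0 * error_a / expected_a


# Values from the steps above: 1 V across a 1 kOhm (1,000 ohm) resistor.
expected = expected_current(1.0, 1000.0)  # 0.001 A, i.e. 1 mA

# Hypothetical ammeter reading, used only for illustration.
measured = 1.05e-3
abs_err, pct_err = reading_error(measured, expected)

# Hypothetical acceptance limit; use the accuracy your meter is specified to.
TOLERANCE_PCT = 1.0

print(f"expected {expected * 1e3:.3f} mA, measured {measured * 1e3:.3f} mA")
print(f"error {abs_err * 1e3:+.3f} mA ({pct_err:+.1f} %)")
if abs(pct_err) > TOLERANCE_PCT:
    print("reading out of tolerance: adjust the calibration knob to read 1 mA")
```

Expressing the error as a percentage makes it easy to compare against the accuracy figure given in the meter's specifications.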